Three-quarters designer and one-quarter front-end developer, with 2 years of experience in UX/UI design and front-end development and 3 years of experience as a project manager for innovative design projects.

I’m a problem-solver who breaks down complexity to deliver simple but efficient user experiences. I'm also a team player who thrives on collaborative, creative work and works well with cross-functional teams through effective communication and shared understanding.

With the user-centered design skills I developed while designing the UX/UI of complex enterprise applications and delivering simple yet customizable, efficient, and scalable user experiences, my Master's degree in Human-Computer Interaction + Design at UW, and my passion for technology, art, and people, I’m well equipped to design delightful user experiences.

Please check my RESUME for more about me.

Tools that I love: Sketch, Arduino, HTML+CSS+JavaScript, Form/QC, Framer.js, Adobe Creative Cloud, Pen+Notebook

Thank you for visiting my portfolio site. If you'd like to chat with me, let's grab a cup of coffee somewhere in New York. Contact me via zhenxi.mi[at]gmail.com

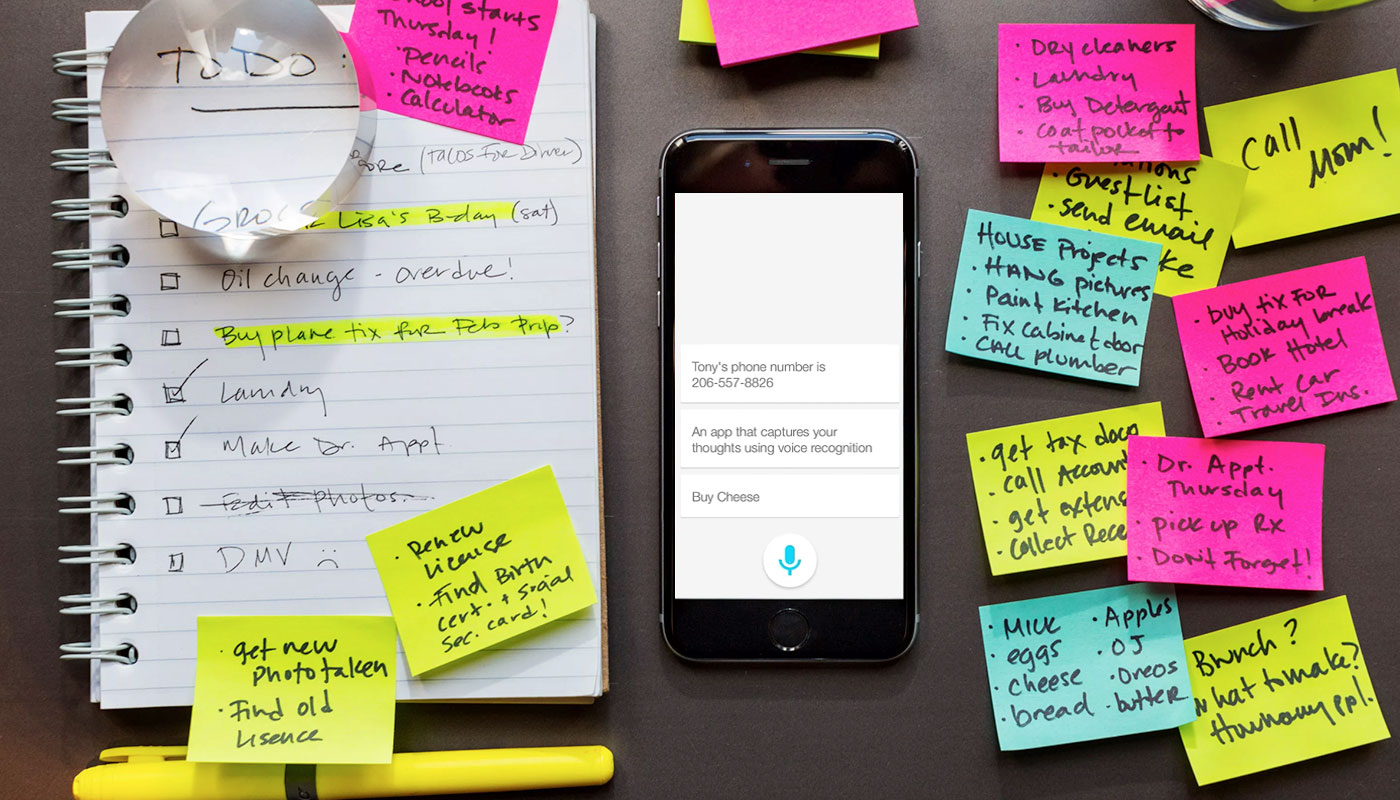

With Tapture, we focus on the experience of taking notes on mobile devices as a substitute for analog note-taking tools such as pen and paper.

This project was selected as one of the 6 finalists for the 2015 Shobe Startup Prize competition held by the HCDE Department at the University of Washington.

We learned that many people prefer taking notes with pen and paper rather than with apps on their phones because pen and paper are quick and easy. Our secondary research and competitive analysis also showed that the existing note-taking apps on the market are complicated and not very intuitive to use, and these are the main factors that stop users from adopting them as their primary tool.

Since the Apple Watch debuted at Apple's September 2014 event, there has been a trend of searching for its killer app. Therefore, we wanted to design an interaction that could be easily transferred to an Apple Watch app.

Team:

Year:

My Role:

Design a quick, non-typing note-taking modality for mobile and wearable devices with a simple and intuitive interaction.

Created a high-fidelity mobile app prototype with Form (a prototyping tool from Google). The prototype demonstrated our concept of combining a one-tap interaction with Nuance Dragon Dictation to create a voice-activated, transcribed note-taking app.

To investigate our design topic, we first interviewed several potential users who frequently take digital notes on their mobile devices, in order to gain more insight into existing note-taking products.

We learned that:

The iOS Notes app takes 6 steps to take a note

Simple interaction and voice dictation will create a smooth and efficient user experience for taking notes with mobile and wearable devices.

User Persona

Based on the insights from our research, we carried out a number of brainstorming sessions to explore UI variations and possible interactions. Our idea started as a to-do-list style; however, that style is not fully compatible with note-taking tasks. I sketched the UI and decided to keep the list layout in the subsequent designs because it makes creating and managing new lines very easy. We also adopted the new Material Design style from Google as our general design guideline, since the concept of cards supports our pursuit of simple interactions.

Guided by Fitts’ Law, we decided on a big button fixed at the bottom center of the screen for adding new notes, with a mic icon that matches the interaction. Users can reach it with only one hand and, after a little practice, even without looking at the screen.
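For reference, Fitts' Law in its common Shannon formulation is shown below. This is the standard textbook form, not something we measured in this project, and the constants are not values from our testing.

```latex
MT = a + b \log_2\!\left(\frac{D}{W} + 1\right)
```

Here MT is the predicted movement time, D is the distance to the target, W is the width of the target along the axis of motion, and a and b are empirically fitted constants. A larger W and a fixed, predictable position both reduce the predicted time, which is the reasoning behind a big button in a constant spot.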

Evolution of the UI design

Refinement of the UI and interactions

We had a few different ideas for the input interaction: tap to record versus press and hold to record; a drop-down menu to delete or share a note versus sliding left to delete and sliding right to share; and whether to list notes in ascending or descending chronological order. The four of us could not represent our target users, so we planned to answer these questions through user testing. We conducted usability testing using the R.I.T.E. (Rapid Iterative Testing and Evaluation) method.

To run the tests, we created low-fi paper prototypes. We gave the participants several scenarios in which Tapture could be used and asked them to walk through the interface while thinking aloud. We learned that people are very used to sliding interactions: the majority of participants tended to slide when they saw the cards. We also learned that the press-and-hold interaction is more time-efficient.

We also heard users’ concerns about the accuracy of voice dictation, so we decided to look into the accuracy of existing voice technologies.

Paper Prototype

After looking at the technologies, we learned that the best speech-to-text engine is Dragon Dictation from Nuance, which also powers Siri (the iPhone's voice-command personal assistant). It was the most accurate product we could find on the market, and it provides a free trial API for testing purposes. It claims 99% accuracy; however, the remaining 1% could ruin the whole product if we didn't come up with a solution. As a result of our discussion, we decided to record the audio at a low but still recognizable quality as a backup. If users want to verify a note, the audio takes them back in time to review the context in which they took it.

Press & Hold to start

Slide left to cancel

Slide right to search

New card added

Tap to play audio note

Audio note playing

To further test our interaction model, we built a high-fidelity interactive prototype with Form (a Google prototyping tool similar to Quartz Composer). In addition, our engineer built a working technical prototype with the Dragon Dictation API, the Evernote API, and Xcode.

Interactive Prototyping with Form

According to research and statistics, in the US an average of one teen dies every day because of cell phone use while driving. Many states have passed specific laws to ban various kinds of cell phone use. The main mobile phone carriers and big phone manufacturers have also developed their own mobile applications to stop drivers from using their cell phones. Our secondary research showed that the laws didn’t work well and that the carriers’ applications caused users a lot of inconvenience.

Our Focus

A text from a sender to a driver involves many different parts. The sender uses their phone to send the text, which is processed by a cell service and then delivered to the phone of the driver in their car. In our competitive analysis, all of the products intervened in areas other than the original sender and their phone. These products, though numerous and varied, fail to make a large impact on the number of drivers who text on the road. Drivers feel annoyed and frustrated by the way the products intervene; in fact, some products add more cognitive load to the driving experience than they take away.

We found a pattern: all the existing products and solutions try to put every bit of the responsibility on the driver (the recipient). So we took a step back to look at the whole life cycle of a text message, seeking a different perspective from which to analyse the problem. We noticed a blank space on the sender side. We asked ourselves: if putting all the responsibility on the driver to be safe isn’t working, then what will? Clearly, texting involves two parties, not just one. So we started focusing on the original sender and their phone, where no interventions have yet been attempted.

Nowadays, as we are more connected than ever before via a variety of mobile devices, many people are very reluctant to be disconnected. The AAA Foundation found that texting increases the likelihood of an accident by 23x and that talking or listening to someone increases that likelihood by 1.3x. So we looked for solutions that incorporate technology and provide an efficient way of mitigating texting while driving.

We designed an OS-level feature for mobile phones called “Drive Mode”, similar to the existing airplane mode, to be turned on in designated scenarios. Drive Mode uses the concept of social signaling: it provides the sender with contextual information about the recipient. Our evaluation research indicated that senders would not text the recipient if they were aware that the recipient was driving.

We did a vast amount of secondary research to understand the underlying human behaviours and the prevalent social norms of interpersonal communication in the digital age.

We dived into both secondary and primary research with two main questions in mind:

On ustwo’s official blog, there is a series of five posts about the future of in-car HMI design. The team has done a large amount of research on in-car information and the interfaces that manage that rich cluster of information. In addition, they are trying to bring UCD thinking into their solutions and suggestions in order to envision the future user experience in our cars.

We contacted the auto team at ustwo and scheduled a weekly online meeting with them. We received very helpful design mentorship from ustwo London.

Dr. Linda Ng Boyle

During our secondary research, one author behind several of the papers we read was Dr. Linda Ng Boyle, a professor here at the University of Washington (UW) who is in charge of the Human Factors and Statistical Modeling Lab. To gain richer knowledge of the subject, we reached out to her and conducted an SME (Subject Matter Expert) interview. The findings helped us refine our research scope and the research questions within it, and directed our secondary research toward the right resources.

Our team was lucky enough to get a tour of Boyle’s lab and her input on how to test and research distracted driving. Her lab has a simulator that includes a car seat, pedals for gas and brake, a digital dashboard, and a stand that can hold a small tablet for simulating tasks or a GPS. She commented that drivers sometimes automatically reach for a seatbelt, so it would be interesting to add one to the seat.

Car Simulator

We carried out a few brainstorming sessions with our internal team and the sponsor team, attempting to map out the whole spectrum of the problem space. Each of us ran a parallel design process on possible solutions for reducing texting while driving. We fleshed out many interesting ideas for each step of a text message's journey. We explored social shaming, a rear-view mirror display, adaptive UI, and social signaling, among others.

To search for possible solutions, we sketched out different scenarios in which the modalities we had brainstormed could be used. This helped us think outside the box before narrowing our focus.

After more secondary research and technology verification discussions, we narrowed our focus. We decided to implement our idea on top of the iMessage application on iOS because the iPhone is widely used and, given our time constraint, we could follow the iOS design guidelines for our interface. In addition, our system uses the Bluetooth connection between the car and the phone to detect the driver's status.
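The signaling idea can be sketched in a few lines. This is a minimal illustration only, not our prototype and not an iOS API: the `Phone` class, the `connected_to_car_bluetooth` flag, and `status_banner` are hypothetical names, and it assumes that a Bluetooth connection to the car is the only signal used to infer driving.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Phone:
    """Hypothetical model of a recipient's phone."""
    owner: str
    # Assumption: an active Bluetooth link to the car means the owner is driving.
    connected_to_car_bluetooth: bool = False

    @property
    def drive_mode(self) -> bool:
        return self.connected_to_car_bluetooth


def status_banner(recipient: Phone) -> Optional[str]:
    """Return the text shown to the sender while composing, or None if no signal is needed."""
    if recipient.drive_mode:
        # Share only the fact that the recipient is driving, never their location.
        return f"{recipient.owner} is driving"
    return None


print(status_banner(Phone("Alex", connected_to_car_bluetooth=True)))
```

The sketch deliberately exposes only a driving status to the sender, since location is not part of the signal.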

Before diving into the details of our design, we conducted our first round of usability research. The research used one-on-one interviews and cognitive walkthroughs. We set up a task that asked the participant to send a text message to a person who is driving. There were two different scenarios for the participant to walk us through, and we asked them to think aloud. Through the research, we attempted to answer two main questions. First, if the sender were aware that the recipient was driving, would that status change their texting behavior? Second, do drivers mind if their driving status is shared with the sender?

Usability testing with paper prototype

Usability testing demographics

The results were very promising and supported our hypotheses. 19 out of 20 participants said that knowing the recipient was driving would change their texting behavior. And all the participants said they would not mind the system showing their driving status as long as it didn't reveal where they were.

"It indicates it's not a good time to text him."

Quote from participant

"I'm gonna text him anyway since the message will be deferred."

Quote from participant

The second round of research was conducted in the car simulation lab run by Dr. Linda Boyle, to see both what users say and what they do. The participants were asked to accomplish tasks by interacting with our design while driving the simulated car in real-life scenarios. We developed an interactive prototype that users could try out on a prepared mobile device. In addition, we created a behavior prototype for the testing: the participants interacted with a voice command system that we simulated using the Wizard of Oz method.

Usability testing in car simulator

We were challenged to work on inclusive design in a 10-week project for the course Visual Storytelling, Microsoft Expo. Inspired by a YouTube video called How a Blind Person Grocery Shops, my team took a very strong interest in improving the accessibility of grocery shopping. We learned from interviews that people like to use existing online services to help with grocery shopping when they are temporarily less able to shop by themselves. However, they also complained that the quality of the groceries was not guaranteed. In addition, we learned that some local communities offer help to their neighbours.

Shooting Video for Prototype

Year:

2015 (10 weeks)

My Role:

Design an inclusive grocery shopping experience by leveraging crowdsourcing from the local community in order to improve access to fresh groceries for people with permanent or temporary disabilities.

We handed in the final deliverables: a video prototype that demonstrated the user experience of our design, as well as wireframes and interactive prototypes.

The project was tasked with the 2015 Microsoft Design Expo challenge, inclusive design, rather than a specific problem to tackle. Therefore, we started with a theme, or problem space. To find a direction to get started, we needed to make sure we had a clear understanding of what inclusive design is. We worked hard to scope it by listing all kinds of disabilities: temporary, permanent, and situational.

Exploring inclusive design

Narrowing down our scope

With the scope settled, we crowdsourced themes and problems that people with disabilities face. From there, we searched for emerging technologies and appropriate solutions to improve accessibility in those scenarios. We formed groups according to our interests and started our inclusive design journey. Our group was interested in designing a better grocery shopping experience for people with vision or mobility impairments, as well as the elderly.

We learned that grocery shelving is designed not to optimize the shopper's experience but to maximize the time shoppers spend in the store. This insight made us wonder what side effects this design approach causes. To find out, we conducted half-hour observation sessions in three different local grocery stores. We looked at the shopping experience from four main perspectives: in-store accessibility (store settings, shelving and labeling, item location and sectioning), out-of-store accessibility (online information, distance to residence, and transportation facilities), shopper demographics, and shoppers' behaviors in the store.

What we observed

Competitive Analysis

We looked at existing applications and services that try to alleviate the pains of traditional grocery shopping. However, according to our interviews with their current users, people felt that these products are either a bit impersonal or unable to guarantee grocery quality.

We held a number of brainstorming sessions to analyze users' pain points and collaborated to figure out a solution for a better grocery shopping experience.

Due to the complexity of the grocery shopping experience across different demographics, we had to start with a lean perspective and try to generalize afterwards. We therefore first prioritized the possible target audiences and chose the elderly as the main target.

The growing population of senior citizens urged us to think about how to bring more delightful experiences to all aspects of their lives. In addition, many of them are not very tech-savvy, so ease of understanding and ease of use had to be considered.

User reviews are a ubiquitous part of the online consumer experience. From hotels to restaurants to blenders, the one-to-five stars and the thumbs-up and thumbs-down are Internet icons of customer satisfaction. Online customer reviews, says linguist Camilla Vásquez, are “an active and creative expression of what writers have the power to do.” (Vásquez, 2014)

In working with Premera Blue Cross, a Washington-based health insurance corporation, we learned that reviews are a consistent problem in tools meant to help users find medical providers.

Though as many as 59% of survey respondents report that they find online reviews helpful and view them as important to their choice of a medical provider (Hanauer et al., 2014), reviews in current systems are lacking in both availability and credibility.

There is a shortage of reviews for medical providers, and those reviews that do exist are almost exclusively positive.

Reviews can be improved by generating more reviews of higher quality via:

Simplifying reviews

Creating a flexible review platform

Increasing immediacy

Following up after multiple visits

Immediate and easy reviews incentivize users to give feedback. Once they have made a one-click review, they are asked optional contextual questions to elaborate on their opinion.

Patients become expert reviewers as they visit a provider more often. As they make more visits, their initial reviews are expanded through questions that require more experience with the doctor.
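As a rough illustration of how follow-up questions could unlock with repeat visits, here is a minimal sketch. The question wording, the visit thresholds, and the names `FOLLOW_UPS` and `next_questions` are all hypothetical; they are not taken from the Premera project.

```python
from typing import List, Set, Tuple

# Hypothetical follow-up questions, each paired with the minimum number
# of visits a patient needs before the question makes sense to ask.
FOLLOW_UPS: List[Tuple[int, str]] = [
    (1, "Was it easy to book this appointment?"),
    (3, "Does this provider remember your history between visits?"),
    (5, "Would you recommend this provider for long-term care?"),
]


def next_questions(visit_count: int, answered: Set[str]) -> List[str]:
    """Return the optional questions unlocked by visit_count and not yet answered."""
    return [
        question
        for min_visits, question in FOLLOW_UPS
        if visit_count >= min_visits and question not in answered
    ]


# After a first visit and a one-click review, only the simplest question is offered.
print(next_questions(1, set()))
```

Keeping every question optional and unlocking them gradually matches the goal of a review that stays quick at first and grows more detailed over time.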